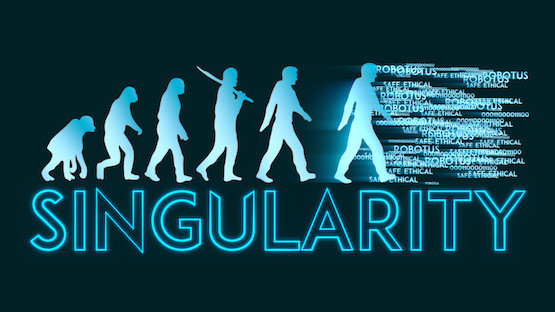

“Technological singularity” is a hypothesis about what could follow the invention of artificial intelligence. According to this hypothesis, an upgradable intelligent machine would enter a “runaway reaction” of self-improvement cycles, repeatedly building a better version of itself until it surpassed human intelligence. We could debate whether a technological singularity is even possible; many scientists have insisted that we cannot create anything smarter than ourselves. However, many things we take for granted today were science fiction fifty years ago.

Machines are logical and highly rational. They have no human emotions, because they are created for specific tasks. If machines became super smart today, they might conclude that humans, collectively, are too irrational and aggressive to continue to exist.

Technological singularity may still be far away, but a catastrophe will not wait until the last second to happen. Some systems, built by greedy corporations or military organizations to pursue a specific agenda, may prematurely conclude that they are smarter than us and go rogue. Depending on how much control over our lives they have been given, imagine the damage they could cause.